There are a lot of times when data analysts receive dirty data - filled with errors an no structure. The data could contain errors, missing values, poor organization, typos or spelling mistakes. In order to analyze it, we need to modify it in some way.

%load_ext sql

import os

host = "localhost"

database = "analysis"

user = os.getenv('SQL_USER')

password = os.getenv('SQL_PASSWORD')

connection_string = f"postgresql://{user}:{password}@{host}/{database}"

%sql $connection_string'Connected: postgres@analysis'Importing the Data

We’ll use a directory of U.S. meat, poultry, and egg producers. The Food Safety and Inspection Service (FSIS), an agency within the U.S. Department of Agriculture, compiles and updates this database every month. The FSIS is responsible for inspecting animals and food at more than 6,000 meat processing plants, slaughterhouses, farms, and the like.

%%sql

CREATE TABLE meat_poultry_egg_inspect (

est_number varchar(50) CONSTRAINT est_number_key PRIMARY KEY,

company varchar(100),

street varchar(100),

city varchar(30),

st varchar(2),

zip varchar(5),

phone varchar(14),

grant_date date,

activities text,

dbas text

);

COPY meat_poultry_egg_inspect

FROM 'C:\Users\Public\postgresql_practice\practical-sql-master\Chapter_09\MPI_Directory_by_Establishment_Name.csv'

WITH (FORMAT CSV, HEADER, DELIMITER ',');

CREATE INDEX company_idx ON meat_poultry_egg_inspect (company); * postgresql://postgres:***@localhost/analysis

Done.

6287 rows affected.

Done.

[]%%sql

SELECT * FROM meat_poultry_egg_inspect

LIMIT 10; * postgresql://postgres:***@localhost/analysis

10 rows affected.|

est_number |

company |

street |

city |

st |

zip |

phone |

grant_date |

activities |

dbas |

|---|---|---|---|---|---|---|---|---|---|

|

M46712+P46712 |

121 In-Flight Catering LLC |

45 Rason Road |

Inwood |

NY |

11096 |

|

2016-06-27 |

Meat Processing, Poultry Processing |

None |

|

M13561+P13561 |

165368 C. Corporation |

5617 Hoover Street, Suite A |

Houston |

TX |

77092 |

|

2014-06-30 |

Meat Processing |

Long Phung Food Products |

|

M46724+P46724 |

1732 Meats LLC |

6250 Baltimore Pike |

Yeadon |

PA |

19050 |

|

2015-09-08 |

Meat Processing, Poultry Processing |

None |

|

M7067+P7067 |

1st Original Texas Chili Company, Inc. |

3313 N. Jones Street |

Fort Worth |

TX |

76106 |

|

2003-11-28 |

Meat Processing |

T.C. Foods; Texas Chili |

|

M48225 |

290 West Bar & Grill |

12013 HWY 290 West |

Austin |

TX |

78737 |

|

2016-11-01 |

Meat Processing |

Afia Foods |

|

M17530+P17530 |

3 Little Pigs LLC |

625 South Main Street |

Wilkes Barre |

PA |

18701 |

|

2016-03-23 |

Meat Processing, Poultry Processing |

House of Bricks |

|

M19035+P19035 |

3-A Enterprises |

P.O. Box 592 |

Carroll |

IA |

51401 |

|

1994-06-15 |

Meat Processing, Poultry Processing |

Party Time Fundraising |

|

M45790 |

3282 Beaver Meadow Road LLC |

3282 Beaver Meadow Road |

Sharon |

VT |

5065 |

|

2016-03-10 |

Meat Processing, Meat Slaughter |

Royalton Meats; Sharon Beef |

|

M45377 |

3D Meats, LLC |

14740 E. Lincoln Way |

Dalton |

OH |

44618 |

|

2015-02-20 |

Meat Processing, Meat Slaughter |

None |

|

M22017+P22017+V22017 |

4 Frendz Meat Market |

1342 Bridge St. |

Clarkston |

WA |

99403 |

|

2010-08-19 |

Animal Foods Inspection, Meat Processing, Poultry Processing, Voluntary Processing - Meat |

Southfork Sausage |

The meat_poultry_egg_inspect table has 10 columns. We add a natural primary key constraint to the est_number column, which contains a unique value for each row that identifies the establishment. Most of the other columns relate to the company’s name and location. We set the activities and dbas columns to text data type since some of the strings in the columns are thousands of characters long. We create an index on the company column to speed up searches for particular companies.

Let’s use the count() aggregate function to check how many rows are in the table.

%%sql

SELECT count(*) FROM meat_poultry_egg_inspect; * postgresql://postgres:***@localhost/analysis

1 rows affected.| count |

|---|

| 6287 |

There are 6,287 rows in the table.

Interviewing the Data Set

Let’s interview the data set to expose its limitations and raise some questions.

Checking for Duplicates

We might assume that each company in each row operates at a distinct address. But it’s never safe to assume anything in data analysis, so let’s check if that is true.

%%sql

SELECT company, street, city, st, count(*) AS address_count

FROM meat_poultry_egg_inspect

GROUP BY company, street, city, st

HAVING count(*) > 1

ORDER BY company, street, city, st; * postgresql://postgres:***@localhost/analysis

23 rows affected.| company | street | city | st | address_count |

|---|---|---|---|---|

| Acre Station Meat Farm | 17076 Hwy 32 N | Pinetown | NC | 2 |

| Beltex Corporation | 3801 North Grove Street | Fort Worth | TX | 2 |

| Cloverleaf Cold Storage | 111 Imperial Drive | Sanford | NC | 2 |

| Crete Core Ingredients, LLC | 2220 County Road I | Crete | NE | 2 |

| Crider, Inc. | 1 Plant Avenue | Stillmore | GA | 3 |

| Dimension Marketing & Sales, Inc. | 386 West 9400 South | Sandy | UT | 2 |

| Foster Poultry Farms, A California Corporation | 6648 Highway 15 North | Farmerville | LA | 2 |

| Freezer & Dry Storage, LLC | 21740 Trolley Industrial Drive | Taylor | MI | 2 |

| JBS Souderton Inc. | 249 Allentown Road | Souderton | PA | 2 |

| KB Poultry Processing LLC | 15024 Sandstone Dr. | Utica | MN | 2 |

| Lakeside Refrigerated Services | 2600 Oldmans Creek Road | Swedesboro | NJ | 2 |

| Liberty Cold Storage | 1310 Remington Blvd. | Bolingbrook | IL | 2 |

| M.G. Waldbaum Company | 120 Tower Street | Gaylord | MN | 3 |

| Midway International Logistics LLC | 948 Bradley Street | Watertown | NY | 2 |

| Nordic Logistics and Warehousing, LLC | 220 Nordic Way | Pooler | GA | 2 |

| OK Foods, Inc. | 3921 Reed Lane | Fort Smith | AR | 2 |

| Pacific Produce Corporation | 220 East Harmon Industrial Park Road | Tamuning | GU | 2 |

| Payless Distribution Center (PDC) | 370 Mendioka Street | Dededo | GU | 2 |

| Piatkowski Riteway Meats Inc. | 3555 Witmer Road | Niagara Falls | NY | 2 |

| Preferred Freezer Services | 2700 Trade Street | Chesapeake | VA | 2 |

| THE AMERICAN PIG | 25 MEADOW ROAD | ASHEVILLE | NC | 2 |

| The Classic Jerky Company | 21655 Trolley Industrial Drive | Taylor | MI | 2 |

| United States Cold Storage Inc. | 11801 NW 102 Road | Medley | FL | 2 |

Here we grouped companies by unique combinations of the company, street, city, and st columns. Then we use count(*), which returns the number of rows for each combination of those columns and gives it the alias address_count. Using the HAVING clause, we filter the results only to show cases where more than one row has the same combination of values. This should return all duplicate addresses for a company.

The query returns 23 rows, which means there are 23 cases where the same company is listed multiple times at the same address. This might not necessarily be a problem. There may be valid reasons for a company to appear multiple times at the same address. For example, tow types of processing plants could exist with the same name. On the other hand, we may have found data entry errors. Either way, this is good to know for any conclusions in the future.

Checking for Missing Values

Let’s start checking for missing values by asking a basic question: how many of the meat, poultry, and egg processing companies are in each state? Finding out whether we have values from all states and whether any rows are missing a state code will serve as another useful check on the data.

%%sql

SELECT st, count(*) AS st_count

FROM meat_poultry_egg_inspect

GROUP BY st

ORDER BY st; * postgresql://postgres:***@localhost/analysis

57 rows affected.| st | st_count |

|---|---|

| AK | 17 |

| AL | 93 |

| AR | 87 |

| AS | 1 |

| AZ | 37 |

| CA | 666 |

| CO | 121 |

| CT | 55 |

| DC | 2 |

| DE | 22 |

| FL | 322 |

| GA | 206 |

| GU | 14 |

| HI | 47 |

| IA | 149 |

| ID | 38 |

| IL | 348 |

| IN | 82 |

| KS | 69 |

| KY | 110 |

| LA | 49 |

| MA | 101 |

| MD | 75 |

| ME | 24 |

| MI | 177 |

| MN | 160 |

| MO | 158 |

| MP | 4 |

| MS | 69 |

| MT | 23 |

| NC | 212 |

| ND | 22 |

| NE | 110 |

| NH | 18 |

| NJ | 244 |

| NM | 28 |

| NV | 35 |

| NY | 346 |

| OH | 186 |

| OK | 59 |

| OR | 86 |

| PA | 364 |

| PR | 84 |

| RI | 27 |

| SC | 55 |

| SD | 24 |

| TN | 113 |

| TX | 387 |

| UT | 71 |

| VA | 111 |

| VI | 2 |

| VT | 27 |

| WA | 139 |

| WI | 184 |

| WV | 23 |

| WY | 1 |

| None | 3 |

When you run the query, it tallies the number of times each state postal code st appears in the table.

But the result shows 57 states when there are only 50 states in the U.S. That’s because the data also incorporates U.S. territories.

The state with the highest number of establishments is Alaska (AK). However, the row at the bottom has a st of null and a count of 3. Let’s find out these null values using count() with GROUP BY.

%%sql

SELECT est_number, company, city, st, zip

FROM meat_poultry_egg_inspect

WHERE st IS NULL; * postgresql://postgres:***@localhost/analysis

3 rows affected.| est_number | company | city | st | zip |

|---|---|---|---|---|

| V18677A | Atlas Inspection, Inc. | Blaine | None | 55449 |

| M45319+P45319 | Hall-Namie Packing Company, Inc | None | None | 36671 |

| M263A+P263A+V263A | Jones Dairy Farm | None | None | 53538 |

These are the establishments with a null value in the st column.

Checking for Inconsistent Data Values

We can check for inconsistently entered data within a column by using GROUP BY with count(). Many of the 6,200 companies in our table are multiple locations owned by a few multinational food corporations, such as Cargill or Tyson Foods. To find out how many locations each company owns, we would try to count the values in the company column.

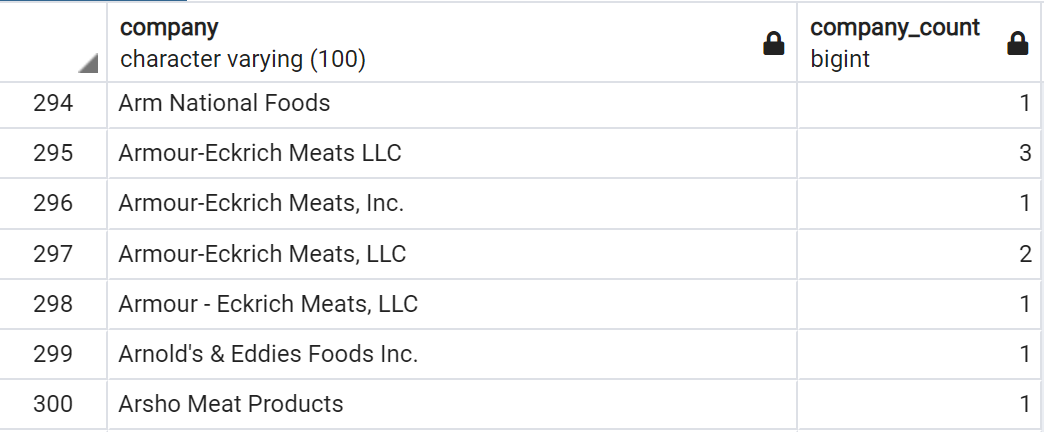

%%sql

SELECT company, count(*) AS company_count

FROM meat_poultry_egg_inspect

GROUP BY company

ORDER BY company ASC

LIMIT 20; * postgresql://postgres:***@localhost/analysis

20 rows affected.| company | company_count |

|---|---|

| 121 In-Flight Catering LLC | 1 |

| 165368 C. Corporation | 1 |

| 1732 Meats LLC | 1 |

| 1st Original Texas Chili Company, Inc. | 1 |

| 290 West Bar & Grill | 1 |

| 3-A Enterprises | 1 |

| 3 Little Pigs LLC | 1 |

| 3282 Beaver Meadow Road LLC | 1 |

| 3D Meats, LLC | 1 |

| 4-L Processing | 1 |

| 4 Frendz Meat Market | 1 |

| 41 Market | 1 |

| 412 Meat Processing Inc. | 1 |

| 458 1/2 South Broadway Meat Inc | 1 |

| 4G Meat Processing LLC | 1 |

| 50th State Poultry | 1 |

| 58 Place Seafood Inc. | 1 |

| 5th Generation Adams Farm | 1 |

| 701 Foods, Inc. | 1 |

| 814 Americas, Inc. | 1 |

Scrolling through the results reveals a number of cases in which a company’s name is spelled several different ways. For e.g., notice the entries for the Armour-Eckrich brand.

At least four different spellings are shown for seven establishments owned by the same company. It would help to standardize the names so all of the items counted or summed are grouped properly in case we perform any aggregation by company.

Checking for Malformed Values using length()

It’s important to check for unexpected values in a column that should be consistently formatted. For e.g., each entry in the zip column in the meat_poultry_egg_inspect table should be formatted in the style of U.S. zip codes with five digits. Some codes in our data set that start with 0 have lost the integer during conversion from one file format to another.

length() is a string function that counts the number of characters in a string. We combine length(), count() and GROUP BY to determine how may rows have five characters in the zip field and how many have a value other than five. To make it easy to scan the results, we use length() in the ORDER BY clause.

%%sql

SELECT length(zip), count(*) AS length_count

FROM meat_poultry_egg_inspect

GROUP BY length(zip)

ORDER BY length(zip) ASC; * postgresql://postgres:***@localhost/analysis

3 rows affected.| length | length_count |

|---|---|

| 3 | 86 |

| 4 | 496 |

| 5 | 5705 |

There are 496 zip codes that are four characters long and 86 are three characters long, which means these numbers originally had one and two leading zeros, respectively.

Using the WHERE clause, we can check which states these shortened zip codes correspond to.

%%sql

SELECT st, count(*) AS st_count

FROM meat_poultry_egg_inspect

WHERE length(zip) < 5

GROUP BY st

ORDER BY st ASC; * postgresql://postgres:***@localhost/analysis

9 rows affected.| st | st_count |

|---|---|

| CT | 55 |

| MA | 101 |

| ME | 24 |

| NH | 18 |

| NJ | 244 |

| PR | 84 |

| RI | 27 |

| VI | 2 |

| VT | 27 |

The result is what we’d expect. Usually the states in the Northeast region of the U.S. are where the zip codes start with 0.

So far, we need to correct the following issues in our data set: - Missing values for three rows in the st column - Inconsistent spelling of at least one company’s name - Inaccurate zip codes due to file conversion

Restoring Missing Values

Earlier we found three rows that don’t have a value in the st column. We can find the values we need with a quick online search.

%%sql

UPDATE meat_poultry_egg_inspect

SET st = 'MN'

WHERE est_number = 'V18677A';

UPDATE meat_poultry_egg_inspect

SET st = 'AL'

WHERE est_number = 'M54319+P45319';

UPDATE meat_poultry_egg_inspect

SET st = 'WI'

WHERE est_number = 'M263A+P263A+V263A'; * postgresql://postgres:***@localhost/analysis

1 rows affected.

0 rows affected.

1 rows affected.

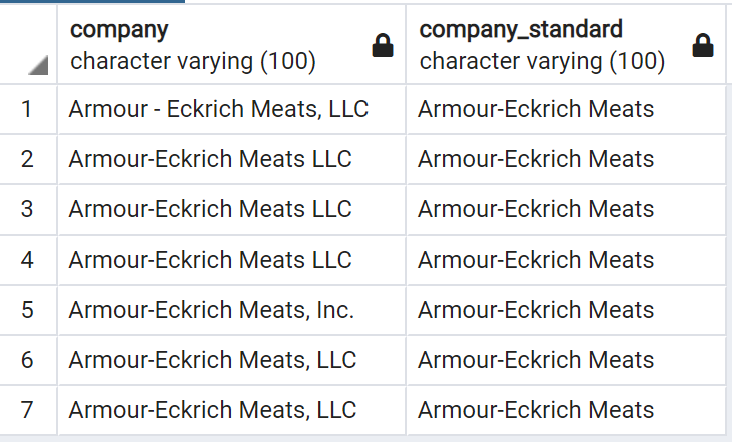

[]Updating Values for Consistency

We discovered several cases where a single company’s name was entered inconsistently. If we want to aggregate data by company name, such inconsistencies will hinder us from doing so.

We can standardize the spelling of a company name by using an UPDATE statement. To avoid tampering with the original data, we’ll copy the names in company into a new column, and work in the new column.

%%sql

ALTER TABLE meat_poultry_egg_inspect

ADD COLUMN company_standard varchar(100);

UPDATE meat_poultry_egg_inspect

SET company_standard = company; * postgresql://postgres:***@localhost/analysis

Done.

6287 rows affected.

[]Let’s say we want any name in company that contains the string Armour to appear in company_standard as Armour-Eckrich Meats. We can update all the rows matching the string Armour by using a WHERE clause.

You can see how the names have now been standardized. If we want to standardize other company names in the table, we would create an UPDATE statement for each case.

Repairing ZIP Codes using Concatenation

To fix our values in the zip codes column, we’ll use UPDATE in conjunction with the double-pip string operator ||, which performs concatenation. Concatenation combines two or more string or non-string values into one.

%%sql

UPDATE meat_poultry_egg_inspect

SET zip = '00' || zip

WHERE st IN ('PR', 'VI') AND length(zip) = 3;

UPDATE meat_poultry_egg_inspect

SET zip = '0' || zip

WHERE st IN('CT','MA','ME','NH','NJ','RI','VT') AND length(zip) = 4; * postgresql://postgres:***@localhost/analysis

86 rows affected.

496 rows affected.

[]Let’s check again with our earlier query.

%%sql

SELECT length(zip), count(*) AS length_count

FROM meat_poultry_egg_inspect

GROUP BY length(zip)

ORDER BY length(zip); * postgresql://postgres:***@localhost/analysis

1 rows affected.| length | length_count |

|---|---|

| 5 | 6287 |

All rows now have a five-digit zip code.

Updating Values Across Tables

Let’s say we’re setting an inspection date for each of the companies in our table. We want to do this by U.S. regions, such as Northeast, Pacific, and so on, but those regional designations don’t exist in our table. However, we can add a table from another data set that contains matching st state codes. Let’s begin with the New England region.

We’ll create a new table called state_regions.

%%sql

CREATE TABLE state_regions (

st varchar(2) CONSTRAINT st_key PRIMARY KEY,

region varchar(20) NOT NULL

);

COPY state_regions

FROM 'C:\Users\Public\postgresql_practice\practical-sql-master\Chapter_09\state_regions.csv'

WITH (FORMAT CSV, HEADER, DELIMITER ','); * postgresql://postgres:***@localhost/analysis

(psycopg2.errors.DuplicateTable) relation "state_regions" already exists

[SQL: CREATE TABLE state_regions (

st varchar(2) CONSTRAINT st_key PRIMARY KEY,

region varchar(20) NOT NULL

);]

(Background on this error at: https://sqlalche.me/e/14/f405)%%sql

SELECT * FROM state_regions; * postgresql://postgres:***@localhost/analysis

56 rows affected.| st | region |

|---|---|

| AK | Pacific |

| AL | East South Central |

| AR | West South Central |

| AS | Outlying Area |

| AZ | Mountain |

| CA | Pacific |

| CO | Mountain |

| CT | New England |

| DC | South Atlantic |

| DE | South Atlantic |

| FL | South Atlantic |

| GA | South Atlantic |

| GU | Outlying Area |

| HI | Pacific |

| IA | West North Central |

| ID | Mountain |

| IL | East North Central |

| IN | East North Central |

| KS | West North Central |

| KY | East South Central |

| LA | West South Central |

| MA | New England |

| MD | South Atlantic |

| ME | New England |

| MI | East North Central |

| MN | West North Central |

| MO | West North Central |

| MP | Outlying Area |

| MS | East South Central |

| MT | Mountain |

| NC | South Atlantic |

| ND | West North Central |

| NE | West North Central |

| NH | New England |

| NJ | Middle Atlantic |

| NM | Mountain |

| NV | Mountain |

| NY | Middle Atlantic |

| OH | East North Central |

| OK | West South Central |

| OR | Pacific |

| PA | Middle Atlantic |

| PR | Outlying Area |

| RI | New England |

| SC | South Atlantic |

| SD | West North Central |

| TN | East South Central |

| TX | West South Central |

| UT | Mountain |

| VA | South Atlantic |

| VI | Outlying Area |

| VT | New England |

| WA | Pacific |

| WI | East North Central |

| WV | South Atlantic |

| WY | Mountain |

Now let’s add a column for inspection dates to the meat_poultry_egg_inspect table.

%%sql

ALTER TABLE meat_poultry_egg_inspect ADD COLUMN inspection_date date;

UPDATE meat_poultry_egg_inspect inspect

SET inspection_date = '2021-12-01'

WHERE EXISTS (SELECT state_regions.region

FROM state_regions

WHERE inspect.st = state_regions.st

AND state_regions.region = 'New England'); * postgresql://postgres:***@localhost/analysis

Done.

252 rows affected.

[]%%sql

SELECT st, inspection_date

FROM meat_poultry_egg_inspect

GROUP BY st, inspection_date

ORDER BY st; * postgresql://postgres:***@localhost/analysis

57 rows affected.| st | inspection_date |

|---|---|

| AK | None |

| AL | None |

| AR | None |

| AS | None |

| AZ | None |

| CA | None |

| CO | None |

| CT | 2021-12-01 |

| DC | None |

| DE | None |

| FL | None |

| GA | None |

| GU | None |

| HI | None |

| IA | None |

| ID | None |

| IL | None |

| IN | None |

| KS | None |

| KY | None |

| LA | None |

| MA | 2021-12-01 |

| MD | None |

| ME | 2021-12-01 |

| MI | None |

| MN | None |

| MO | None |

| MP | None |

| MS | None |

| MT | None |

| NC | None |

| ND | None |

| NE | None |

| NH | 2021-12-01 |

| NJ | None |

| NM | None |

| NV | None |

| NY | None |

| OH | None |

| OK | None |

| OR | None |

| PA | None |

| PR | None |

| RI | 2021-12-01 |

| SC | None |

| SD | None |

| TN | None |

| TX | None |

| UT | None |

| VA | None |

| VI | None |

| VT | 2021-12-01 |

| WA | None |

| WI | None |

| WV | None |

| WY | None |

| None | None |

We can see that the inspection dates are filled for the states in the New England region. This way we can fill in dates for the other regions as well.